Model Deployer Launches!

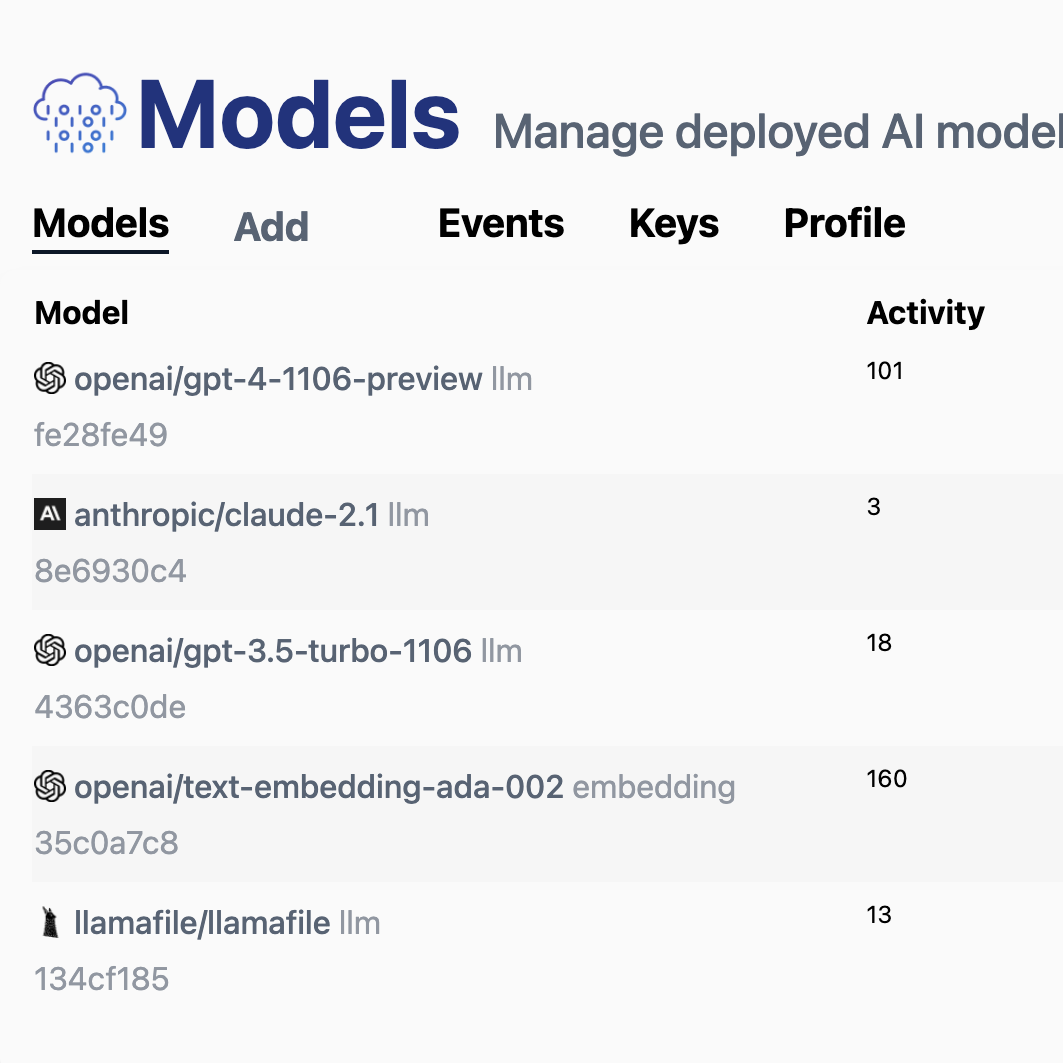

Model Deployer is a single API you can use in production to access your AI models.

Model Deployer is the fastest way to use multiple LLMs in production, with history, usage tracking, rate limiting and more.

It grew out of another project, LLM.js.

LLM.js was created to give people control over how they use AI models in their apps.

It’s great to use GPT-4 and Claude, but it sucks to get locked in. And it’s hard to use local models.

LLM.js solves these problems by providing a single, simple interface that works with dozens of popular models.

As great as it is, it doesn’t fully solve the problem.

Bundling an app with a local model isn't practical: the binaries are hundreds of MBs or even GBs.

Downloading the model on first launch isn't practical either. Some users will patiently sit through it, but most won't. The first 10 seconds mean everything in a new app, and making users wait will not work.

How do you offer the power of server models, with the flexibility of local open-source models?

Model Deployer is the solution. It's an open-source server that manages models for your users. It trades a little bit of privacy for a better user experience.

It supports almost all popular large language models.

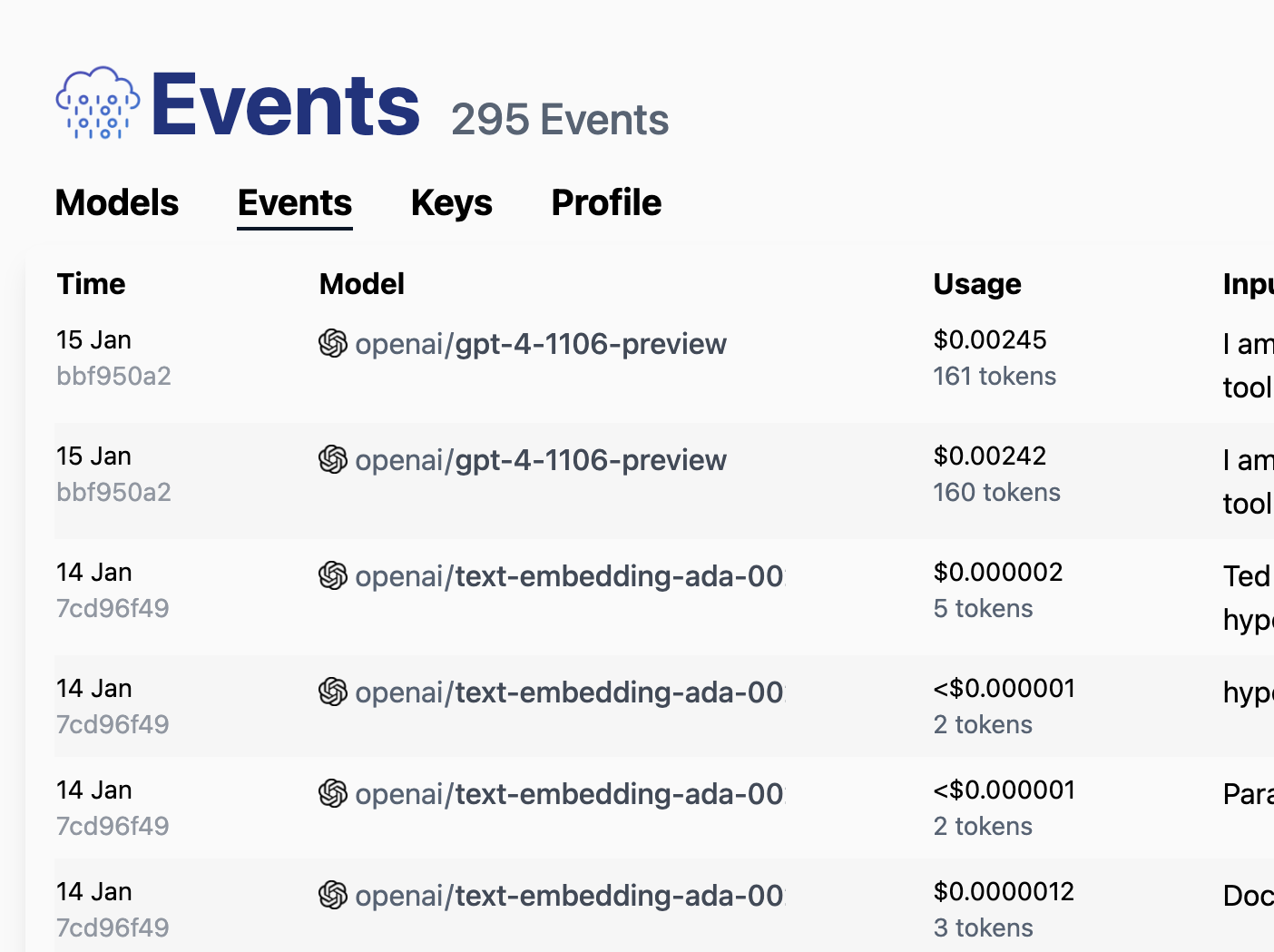

And it lets you track history and monitor usage for each API call.

Importantly, it's built on a 100% open stack. So for users who care, there are ways to go fully local and self-hosted. Or you can use ModelDeployer.com to access APIs instantly.

This gives you the best of both worlds: high-quality AI models, with the flexibility of privacy and local models.
