Model Deployer

An API for all your AI models

OpenAI

gpt-4-turbo-preview

The best and fastest LLM on the market.

Google

gemini-pro

The best LLM from Google

Anthropic

claude-2.1

Best LLM for large context windows

Mistral

mistral-medium

The best LLM from Mistral

OpenAI

gpt-4

The best LLM on the market.

Llamafile

Mixtral-8x7B-Instruct

One of the best open-source LLMs

Anthropic

claude-2.0

The best-value LLM for large context windows

OpenAI

gpt-3.5-turbo

The best value LLM on the market.

Mistral

mistral-small

A more efficient LLM from Mistral

Anthropic

claude-instant-1.2

The fastest LLM with a large context window

Mistral

mistral-tiny

Most efficient LLM from Mistral

- A single API for dozens of LLMs and embedding models

- Supports OpenAI, Anthropic, Mistral, Llamafile and more

- Track API usage for individual users

- Charge users by usage or subscription

- Rate-limit users

- Offer a free faucet for new users

- Allow your users to provide their own API key

Model Deployer turns AI models into production-ready APIs.

Any app developer who wants to use AI models has a few questions to answer:

- How do you rate limit users and prevent someone from running up your bill?

- Do you charge? Subscription? Usage? Flat fee?

- Do you have a free tier?

- Can your customers provide their own API keys?

- Can your customers use their own models?

Model Deployer solves these problems for you.

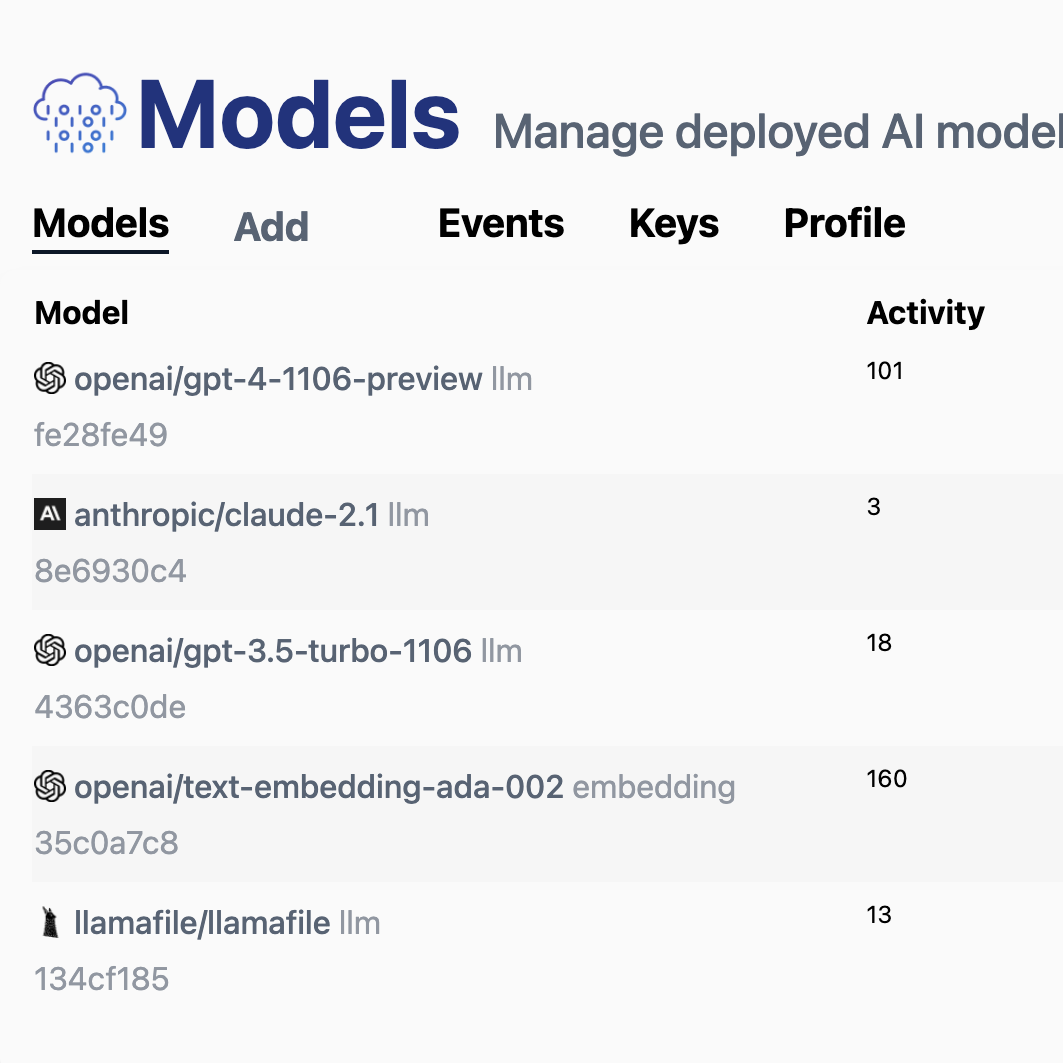

Manage AI models

Track history, monitor usage and rate limit users in production

Flexible Billing

Give your users options: a monthly subscription, usage-based billing, their own API key, or their own model
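The billing options above boil down to one decision per request: does this user owe anything for it? The sketch below is hypothetical and is not Model Deployer's code. The `BillingMode` names, the `free_credit` faucet balance and the per-1K-token price are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class BillingMode(Enum):
    SUBSCRIPTION = "subscription"  # flat monthly fee, not billed per request
    USAGE = "usage"                # billed per token used
    OWN_KEY = "own_key"            # user supplies their own provider API key
    OWN_MODEL = "own_model"        # user runs their own model


@dataclass
class User:
    mode: BillingMode
    free_credit: float = 0.0  # hypothetical free-faucet balance, in dollars


def charge_for_request(user: User, tokens: int, price_per_1k_tokens: float) -> float:
    """Return the amount to bill `user` for one request (hypothetical pricing)."""
    if user.mode is not BillingMode.USAGE:
        # Subscribers are billed monthly; own-key and own-model users
        # pay their provider (or nobody) directly.
        return 0.0
    cost = tokens / 1000 * price_per_1k_tokens
    # Spend free-faucet credit first, then bill whatever is left.
    covered = min(cost, user.free_credit)
    user.free_credit -= covered
    return cost - covered
```

For example, a usage-billed user with $0.25 of faucet credit who sends a 2,000-token request at a made-up price of $0.50 per 1K tokens is billed $0.75, and their faucet balance drops to zero.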

Best Large Language Models

All of the major LLMs are supported in a single interface

Text Similarity Embeddings

Multiple embedding models are supported in a single interface.
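An embedding model turns text into a vector, and a common way to measure how similar two texts are is the cosine similarity of their embeddings. A minimal sketch, using toy vectors in place of real model output; how Model Deployer returns embeddings is not shown here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)
```

For example, `cosine_similarity([1.0, 0.0], [1.0, 0.0])` is `1.0` and `cosine_similarity([1.0, 0.0], [0.0, 1.0])` is `0.0`. Only vectors from the same embedding model should be compared this way.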

Don't get locked in

Switch between providers with a one-line config change—no code changes necessary.
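The idea behind a one-line switch is that app code asks for a model by a stable name, while configuration decides which provider serves it. This is a hypothetical sketch of that pattern; the `CONFIG` shape, the `"default"` name and `build_request` are illustrative assumptions, not Model Deployer's actual config format.

```python
# Hypothetical config: the app always asks for "default"; the config decides
# which provider and model actually serve it.
CONFIG = {
    "default": {"service": "openai", "model": "gpt-4-turbo-preview"},
}


def build_request(prompt: str, config: dict, name: str = "default") -> dict:
    """App code stays the same no matter which provider the config names."""
    entry = config[name]
    return {"service": entry["service"], "model": entry["model"], "prompt": prompt}
```

Moving to Anthropic in this sketch means editing only the `"default"` entry, for example to `{"service": "anthropic", "model": "claude-2.1"}`; every call to `build_request` stays untouched.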

Open Source

Model Deployer is 100% open-source under the MIT license

AI models are great, but using them in your app can be tricky because they aren't free! If you run a high-margin SaaS business, you can probably absorb the cost.

But all kinds of user-facing apps struggle with the economics of using expensive AI models.

Model Deployer solves these problems by making it easy to use lots of different models through the same interface, tracking their usage, rate limiting, and offering ways to charge your users.

And we've gone further: Model Deployer is 100% open-source software under the MIT license, so you can run it yourself for your users. Your users can even run it themselves for maximum privacy and flexibility.

Model Deployer gives you and your users choices

Host Model Deployer on your servers and manage which AI models you support.

Let your users run their own Model Deployer locally, for maximum privacy.

Model Deployer makes deploying AI models in production easy

Deploy and manage popular Large Language Models

Model Deployer makes it easy to deploy dozens of popular AI models in production.

Set defaults like temperature, max_tokens, rate limit and schema, or override them on the client.
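Server-side defaults with client overrides can be modeled as a dictionary merge. A minimal, hypothetical sketch: the setting names follow the sentence above, but the default values and the `CLIENT_OVERRIDABLE` allow-list are assumptions. In this sketch `rate_limit` is deliberately left out of the allow-list, on the assumption that a server would not let clients raise their own limit.

```python
# Hypothetical server-side defaults for one model.
SERVER_DEFAULTS = {"temperature": 0.7, "max_tokens": 512, "schema": None, "rate_limit": 60}

# Assumption: clients may override these, but not their own rate limit.
CLIENT_OVERRIDABLE = {"temperature", "max_tokens", "schema"}


def effective_options(defaults: dict, client_options: dict, overridable: set) -> dict:
    """Start from the server defaults and apply any allowed client overrides."""
    options = dict(defaults)  # copy so the defaults are never mutated
    for key, value in client_options.items():
        if key not in overridable:
            raise KeyError(f"client may not override {key!r}")
        options[key] = value
    return options
```

A client sending `{"temperature": 0.2}` gets temperature 0.2 with every other setting taken from the server defaults.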

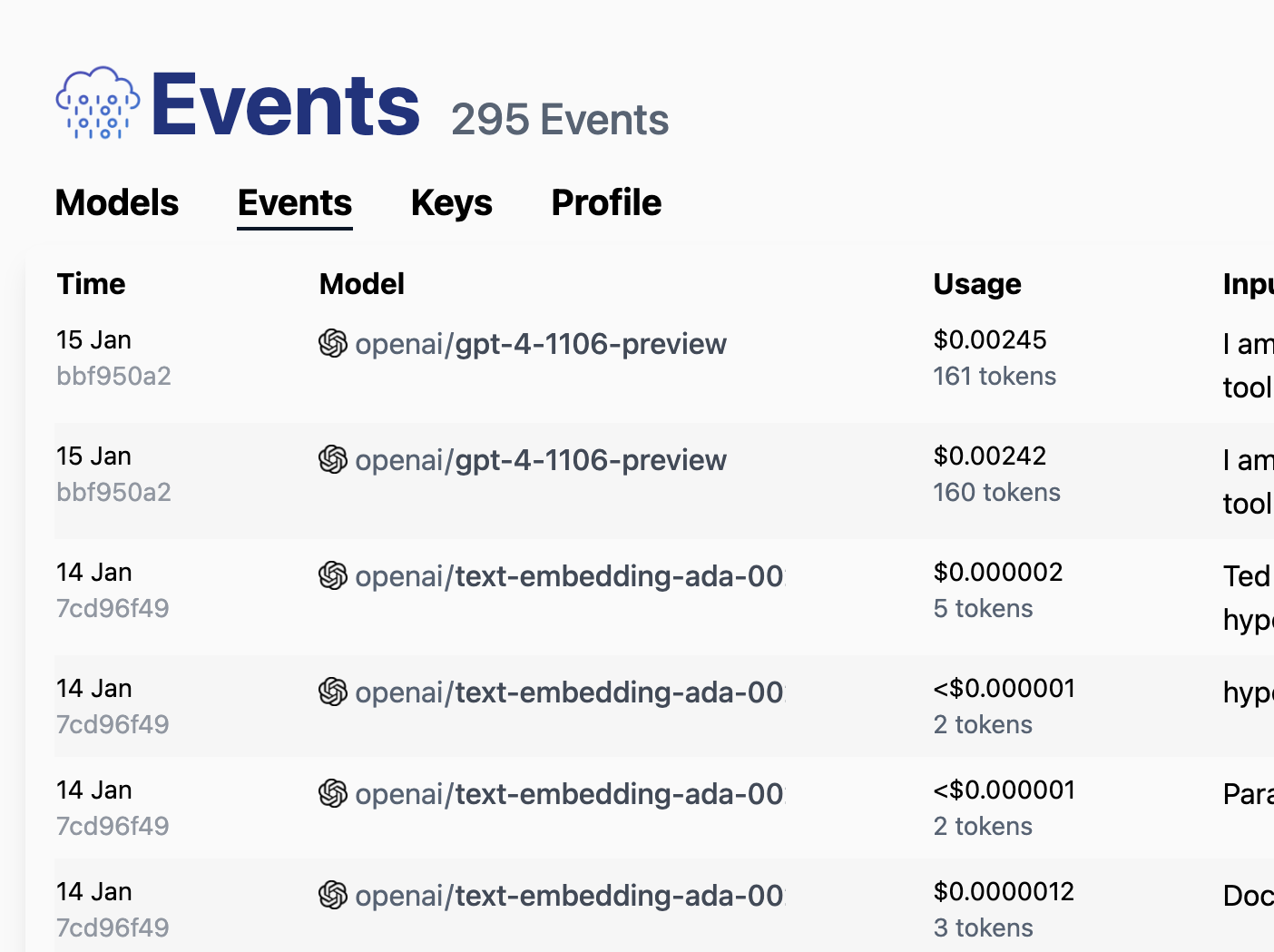

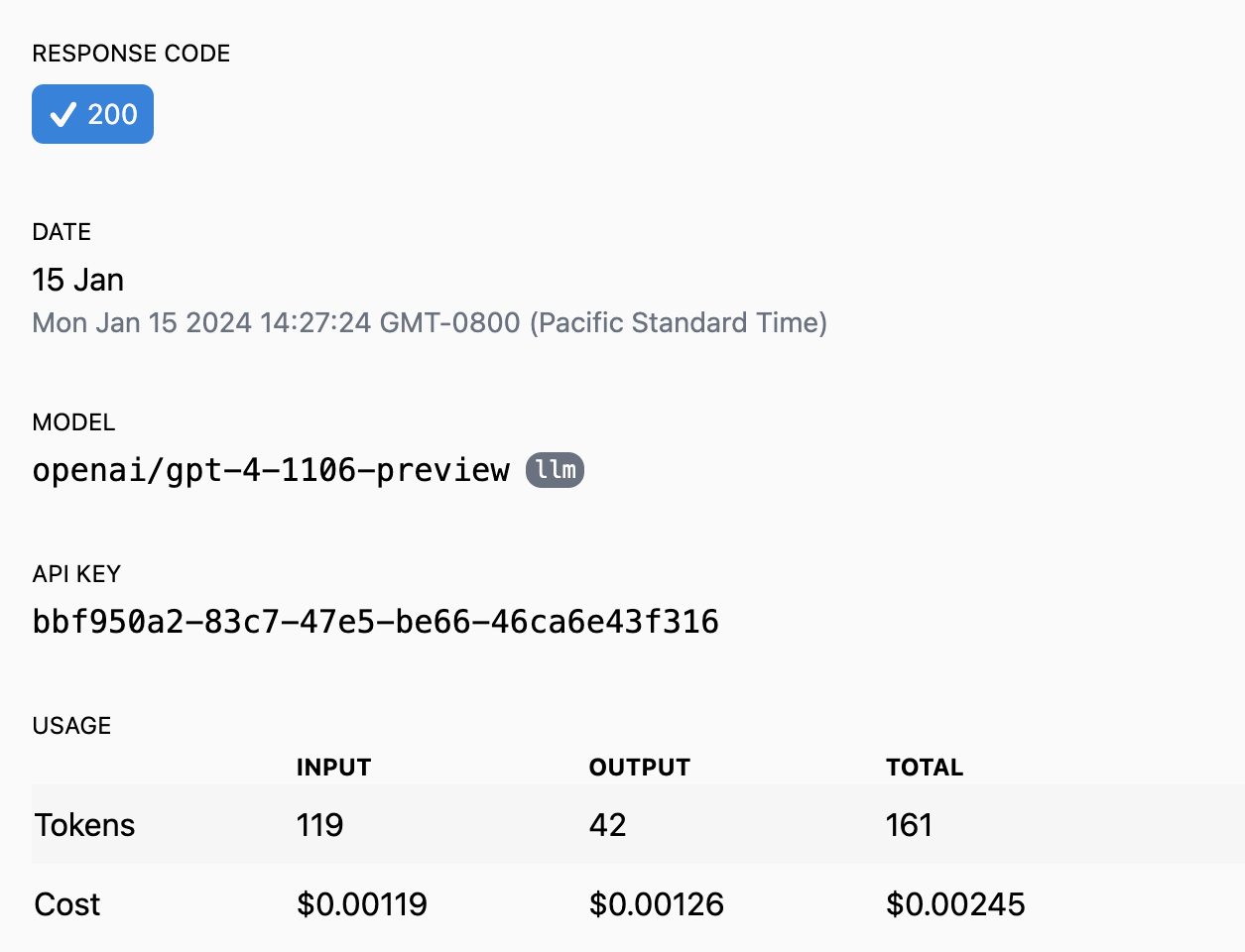

Manage history and costs

Quickly compare cost, usage and history across all your models and API keys.

Find problems, track opportunities and optimize costs with Model Deployer's event request history.

Detailed event log

Every request to your model is tracked, including the request, response and options.

This gives you a detailed log of everything happening with your AI models.
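A log that records the request, response and options for every call also makes it possible to compare costs across models and API keys. The sketch below is a hypothetical shape for such an event and a per-model or per-key cost rollup; the `Event` fields beyond request, response and options (such as `cost`) are assumptions, not Model Deployer's schema.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Event:
    """One logged request: who sent it, to which model, and what happened."""
    api_key: str
    model: str
    request: str
    response: str
    options: dict
    cost: float  # hypothetical: dollar cost recorded with the event
    timestamp: float = field(default_factory=time.time)


def total_cost_by(events: list, attr: str) -> dict:
    """Sum event costs grouped by an Event attribute such as "model" or "api_key"."""
    totals = defaultdict(float)
    for event in events:
        totals[getattr(event, attr)] += event.cost
    return dict(totals)
```

Grouping the same log by `"model"` or by `"api_key"` gives the two views of cost described above.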

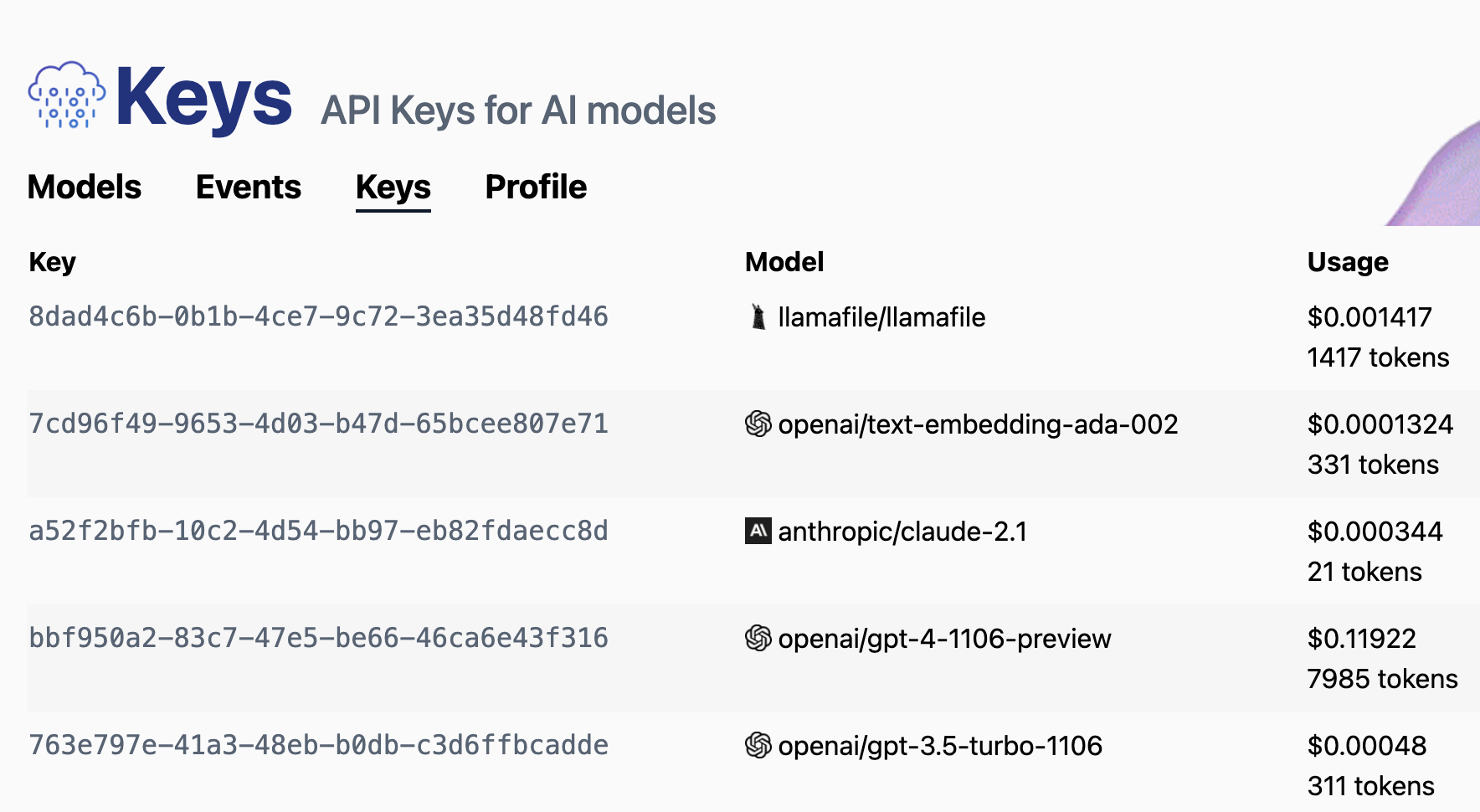

Manage API Keys

API Keys let you set up custom models, configs, rate limits, defaults and more.

Easily track costs and rotate API keys for users in your app.
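Per-key rate limiting is often implemented with a token bucket. This page doesn't say which algorithm Model Deployer uses, so treat the following as a minimal sketch under that assumption; `TokenBucket`, `KeyRateLimiter`, `capacity` and `rate` are hypothetical names. The injectable `clock` keeps it deterministic to test.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` requests per second.

    The "tokens" here are request permits, not LLM tokens.
    """

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.available = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.available = min(self.capacity, self.available + (now - self.updated) * self.rate)
        self.updated = now
        if self.available >= 1:
            self.available -= 1
            return True
        return False


class KeyRateLimiter:
    """One independent bucket per API key."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, self.clock)
            self.buckets[api_key] = bucket
        return bucket.allow()
```

Each key gets a burst of `capacity` requests and then roughly `rate` requests per second, and one noisy key can't use up another key's allowance. This sketch keeps buckets in memory, so a deployment running several server processes would need shared storage for them.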

Whether it's setting up a new model, quickly switching between them in your app, rate limiting users, tracking history or managing costs—Model Deployer has what you need to serve AI models in production.

Get Started

Start using Model Deployer to manage AI models in your apps

Self Host

- Run Model Deployer on your server

- Configure which models are enabled

- Add new local models with Llamafile

- Track usage

- Rate limit users

Model Deployer

- Instantly access dozens of AI models (LLMs, Embeddings)

- Switch providers with a click

- Track usage

- Rate limit users

- Charge users with a flexible billing model